What is it? Azure Data Factory (ADF) is a cloud-based ETL, ELT, and data-integration service for creating data-driven workflows to:

- Orchestrate data movement

- Transform data at scale

This allows us to reorganize raw data into meaningful data stores and data lakes.

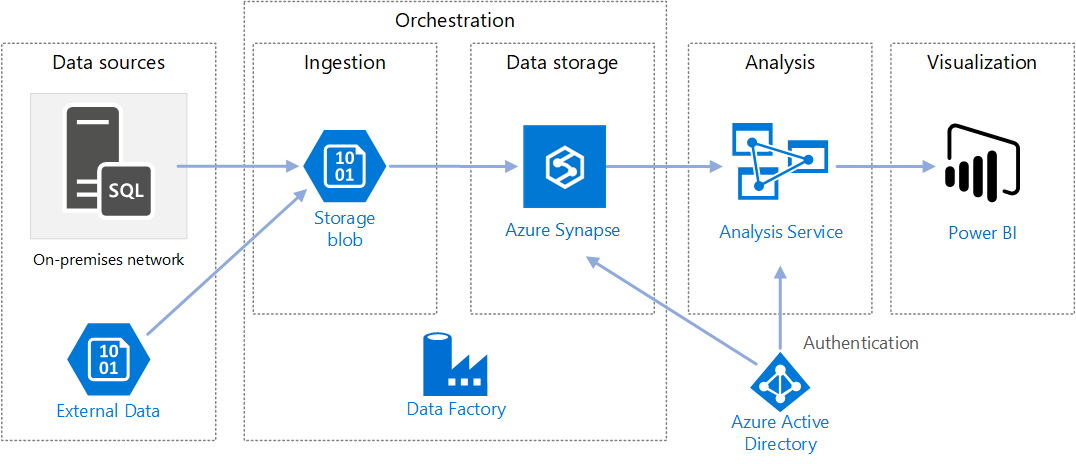

Sample ADF Implementation

Benefits

Enables building complex ETL processes, transforming data visually, and publishing transformed data to data stores for business intelligence applications.

Functions

- Connect and collect

- Transform and enrich (runs on SQL pools / Apache Spark)

- CI/CD and publish

- Monitor

Components

| Component | Description |
|---|---|
| Pipelines | A logical grouping of activities that together perform a specific unit of work. The advantage of a pipeline is that you can manage the activities as a set instead of as individual items. |
| Activities | A single processing step in a pipeline. Azure Data Factory supports three types of activity: data movement, data transformation, and control activities. |
| Datasets | Represent data structures within your data stores. They point to (or reference) the data that you want to use in your activities as either inputs or outputs. |
| Linked services | Define the connection information Azure Data Factory needs to connect to external resources, such as a data source. Azure Data Factory uses them for two purposes: to represent a data store or a compute resource. |
| Data flows | Enable data engineers to develop data transformation logic without writing code. Data flows run as activities within Azure Data Factory pipelines on scaled-out Apache Spark clusters. |
| Integration runtimes | The compute infrastructure Azure Data Factory uses to provide the following data integration capabilities across different network environments: data flow, data movement, activity dispatch, and SSIS package execution. An integration runtime provides the bridge between the activity and linked services. |
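To make the relationship between these components more concrete, here is a minimal Python sketch that builds JSON-style definitions for one linked service, two datasets, and a pipeline with a single copy activity, then checks that every reference resolves. All names (`BlobStorageLS`, `RawCsv`, `CuratedCsv`, `CopyRawToCurated`, the `data` container) are hypothetical, and the `type` strings are modeled on ADF's JSON format as an assumption; check them against the official schema before relying on them. The helper `unresolved_references` is illustrative and not part of any ADF SDK.

```python
import json

# Linked service: connection information for an external resource.
# The name and the placeholder connection string are hypothetical.
linked_service = {
    "name": "BlobStorageLS",
    "properties": {
        "type": "AzureBlobStorage",
        "typeProperties": {"connectionString": "<connection-string>"},
    },
}


def make_dataset(name, folder_path):
    """Dataset: a named reference to data that lives behind a linked service."""
    return {
        "name": name,
        "properties": {
            "type": "DelimitedText",
            "linkedServiceName": {
                "referenceName": linked_service["name"],
                "type": "LinkedServiceReference",
            },
            "typeProperties": {
                "location": {
                    "type": "AzureBlobStorageLocation",
                    "container": "data",  # hypothetical container name
                    "folderPath": folder_path,
                }
            },
        },
    }


raw = make_dataset("RawCsv", "raw")
curated = make_dataset("CuratedCsv", "curated")

# Pipeline: a logical grouping of activities. This one holds a single
# data movement (copy) activity that reads one dataset and writes another.
pipeline = {
    "name": "CopyRawToCurated",
    "properties": {
        "activities": [
            {
                "name": "CopyRawFiles",
                "type": "Copy",
                "inputs": [{"referenceName": raw["name"], "type": "DatasetReference"}],
                "outputs": [{"referenceName": curated["name"], "type": "DatasetReference"}],
                "typeProperties": {
                    "source": {"type": "DelimitedTextSource"},
                    "sink": {"type": "DelimitedTextSink"},
                },
            }
        ]
    },
}


def unresolved_references(pipeline, datasets, linked_services):
    """Return names that are referenced but not defined (illustrative helper)."""
    dataset_names = {d["name"] for d in datasets}
    linked_service_names = {ls["name"] for ls in linked_services}
    missing = []
    # Activities reference datasets by name.
    for activity in pipeline["properties"]["activities"]:
        for ref in activity.get("inputs", []) + activity.get("outputs", []):
            if ref["referenceName"] not in dataset_names:
                missing.append(ref["referenceName"])
    # Datasets reference linked services by name.
    for dataset in datasets:
        ls_ref = dataset["properties"]["linkedServiceName"]["referenceName"]
        if ls_ref not in linked_service_names:
            missing.append(ls_ref)
    return missing


print(json.dumps(pipeline, indent=2))
print(unresolved_references(pipeline, [raw, curated], [linked_service]))
```

The sketch shows the chain of references: the activity points to datasets, and each dataset points to a linked service. The integration runtime is omitted here; when none is specified, the assumption is that a default Azure-hosted runtime is used.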

When to use

- You need to handle large amounts of diverse data in disparate locations

- Your team lacks the coding resources to write code for data processing activities